Fooling Neural Networks in the Physical World with 3D Adversarial Objects

31 Oct 2017 · 3 min read — shared on Hacker News, Lobsters, Reddit, TwitterWe’ve developed an approach to generate 3D adversarial objects that reliably fool neural networks in the real world, no matter how the objects are looked at.

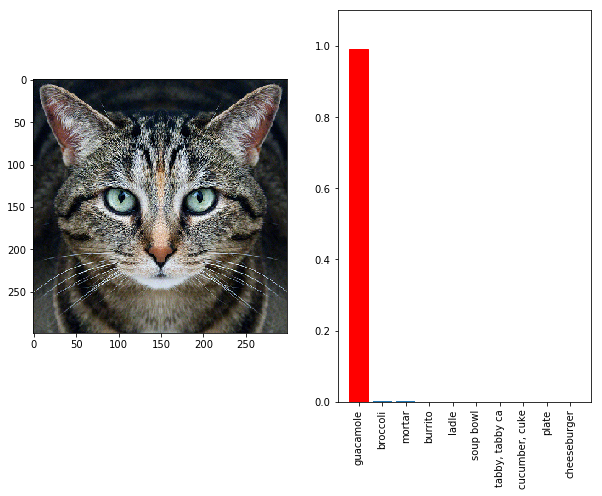

Neural network based classifiers reach near-human performance in many tasks, and they’re used in high risk, real world systems. Yet, these same neural networks are particularly vulnerable to adversarial examples, carefully perturbed inputs that cause targeted misclassification. One example is the tabby cat below, which we perturbed to look like a guacamole to Google’s InceptionV3 image classifier.

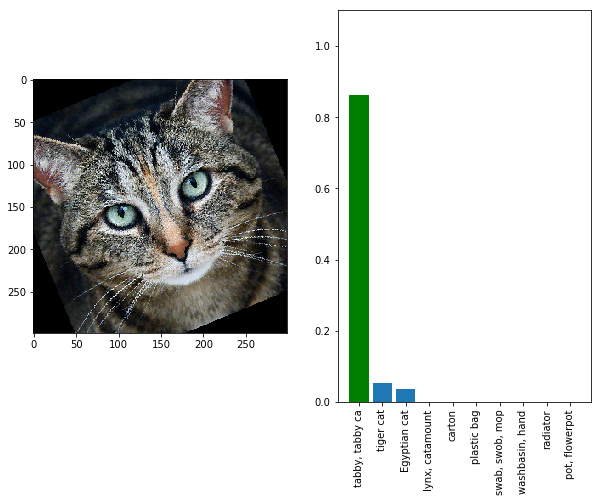

However, adversarial examples generated using standard techniques break down when transferred into the real world as a result of zoom, camera noise, and other transformations that are inevitable in the physical world. Here is the same image as before, but rotated slightly: it is now classified correctly as a tabby cat.

Since the discovery of this lack of transferability, some of the work on adversarial examples has dismissed the possibility of real-world adversarial examples, even in the case of two-dimensional printouts — despite prior work by Kurakin et al., who printed out 2D adversarial examples that remained adversarial in the single viewpoint case. Our work demonstrates that adversarial examples are a significantly larger problem in real world systems than previously thought.

Here is a 3D-printed turtle that is classified at every viewpoint as a “rifle” by Google’s InceptionV3 image classifier, whereas the unperturbed turtle is consistently classified as “turtle”.

We do this using a new algorithm for reliably producing adversarial examples that cause targeted misclassification under transformations like blur, rotation, zoom, or translation, and we use it to generate both 2D printouts and 3D models that fool a standard neural network at any angle.

Our process works for arbitrary 3D models - not just turtles! We also made a baseball that classifies as an espresso at every angle! The examples still fool the neural network when we put them in front of semantically relevant backgrounds; for example, you’d never see a rifle underwater, or an espresso in a baseball mitt.

All the photos above fool the classifier!

See https://www.labsix.org/papers/#robustadv for details on our approach and results.

Acknowledgements

Special thanks to John Carrington / ZVerse, Aleksander Madry, Ilya Sutskever, Tatsu Hashimoto, and Daniel Kang.